COBOL defects paper co-authored by Phase Change scientists wins IEEE Distinguished Paper Award

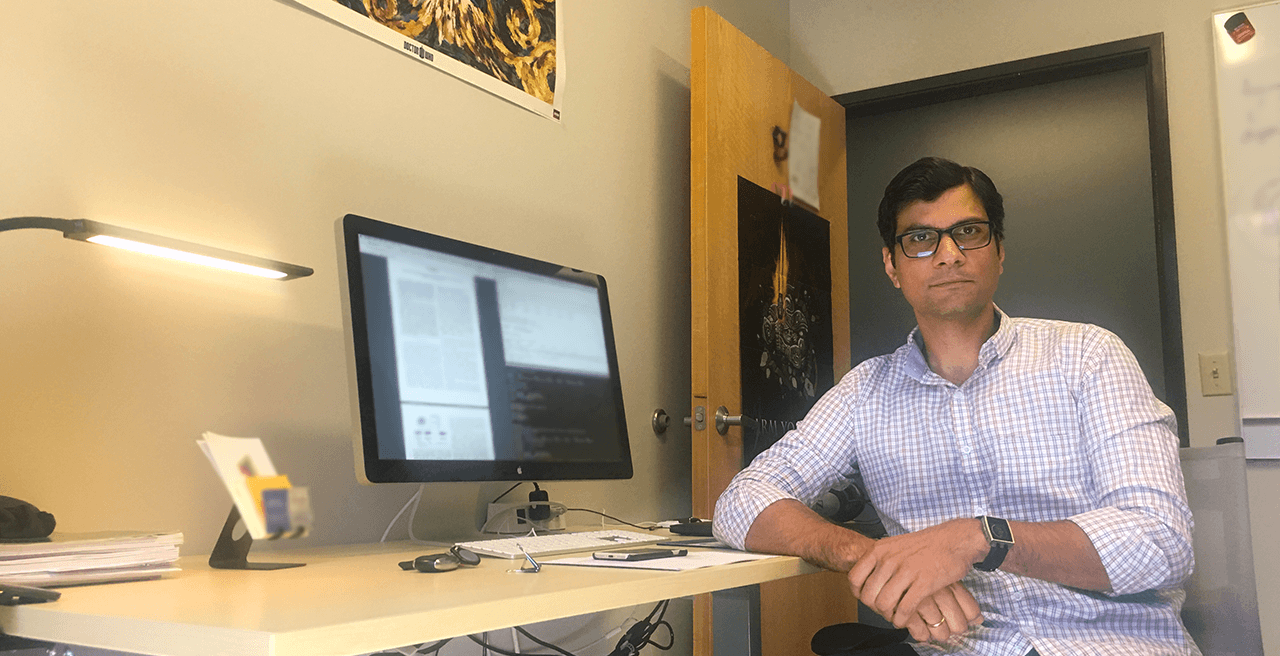

A technical paper co-authored by current and former Phase Change research scientists, and presented at the 2021 annual ICSME event, won a Distinguished Paper Award from the IEEE Computer Society Technical Council on Software Engineering (TCSE). The paper, “Contemporary COBOL: Developers’ Perspectives on Defects and Defect Location,” was co-authored by current Phase Change Senior Research […]